20 Constructing derivatives

This chapter shows how to use the computer to construct the derivative of any¹ function. This is easy because the task of constructing derivatives is well suited to the computer.

We will demonstrate two methods:

- Symbolic differentiation, which transforms an algebraic formula for a function into a corresponding algebraic formula for the derivative.

- Finite-difference methods, which use a “small” but not evanescent \(h\).

sec-prod-comp-rules covers the algorithms used by the computer to construct symbolic derivatives. Why teach you to do with paper and pencil the simpler sorts of problems that the computer does perfectly? One reason is that it helps you anticipate what the results of the computer calculation will be, providing a way to detect errors in how the computation was set up. Another reason is to enable you to follow textbook or classroom demonstrations of formulas, which often come from working out a differentiation problem.

20.1 Why differentiate?

Before showing the easy computer-based methods for constructing the derivative of a function, it is good to provide some motivation: Why is differentiation used so frequently in so many fields of study and application?

A primary reason lies in the laws of physics. Newton’s Second Law of Motion reads:

“The change of motion of an object is proportional to the force impressed; and is made in the direction of the straight line in which the force is impressed.”

Newton used position \(x(t)\) as the basis for velocity \(v(t) = \partial_t x(t)\). “Change in motion,” which we call “acceleration,” is in turn the derivative \(\partial_t v(t)\). Derivatives are also central to the expression of more modern forms of physics such as quantum theory and general relativity.

Many relationships encountered in the everyday or technical worlds are more understandable if framed as derivatives. For instance,

- Electrical power is the rate of change with respect to time of electrical energy.

- Birth rate is one component of the rate of change with respect to time of population. (The others are the death rate and the rates of immigration and emigration.)

- Interest, as in bank interest or credit card interest, is the rate of change with respect to time of assets.

- Inflation is the rate of change with respect to time of prices.

- Disease incidence is one component of the rate of change with respect to time of disease prevalence. (The other components are death or recovery from disease.)

- Force is the rate of change with respect to position of energy.

- Deficit (as in spending deficits) is the rate of change with respect to time of debt.

Often, we know one member of such a function-and-derivative pair but need to calculate the other. Many modeling situations call for putting together different components of change to reveal how some other quantity of interest will change. For example, modeling the financial viability of retirement programs such as US Social Security involves looking at the changing age structure of the population, the returns on investment, the changing cost of living, and so on. In Block V, we will use derivatives explicitly to construct models of systems, such as an outbreak of disease, with many changing parts.

Derivatives also play an important role in design, for instance in the construction and representation of smooth curves, such as a robot’s track or the body of a car. (See sec-splines.) Control systems that work to stabilize an airplane’s flight or regulate the speed and spacing of cars are based on derivatives. The notion of “stability” itself is defined in terms of derivatives. (See sec-equilibria.) Algorithms for optimizing design choices also often make use of derivatives. (See sec-optimization-and-constraint.)

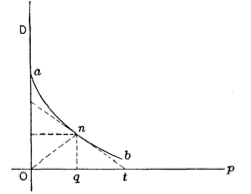

Economics as a field makes considerable use of concepts of calculus—particularly first and second derivatives, the subjects of this Block—although the names used are peculiar to economics, for instance, “elasticity”, “marginal returns” and “diminishing marginal returns.”

20.2 Symbolic differentiation

The R/mosaic function D() takes a formula for a function and produces the derivative. It uses the same sort of tilde expression used by makeFun() or contour_plot() or the other R/mosaic tools. For instance, run the code in Active R chunk lst-symbolic-sin to see the function created by differentiating \(t \sin(t)\) with respect to \(t\).
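A minimal sketch of what such a chunk might contain, assuming the mosaicCalc package is installed and provides D() as described above (the name d_f is chosen here only for illustration):

```r
library(mosaicCalc)  # assumed to provide D() and makeFun()

# Differentiate t * sin(t) with respect to t.
# Left of the tilde: the formula. Right of the tilde: the
# with-respect-to input.
d_f <- D(t * sin(t) ~ t)

d_f      # printing the result shows the formula that D() constructed
d_f(1)   # evaluate the derivative at t = 1
```

By the product rule, the result should be equivalent to \(\sin(t) + t \cos(t)\), although D() may arrange the terms differently.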

If you prefer, you can use makeFun() to define a function, then hand that function to D() for differentiation as in Active R chunk lst-define-then-differentiate:
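The define-then-differentiate pattern might look like the following sketch, again assuming mosaicCalc is installed; the names f and d_f are illustrative:

```r
library(mosaicCalc)  # assumed to provide D() and makeFun()

# First define the function ...
f <- makeFun(t * sin(t) ~ t)

# ... then hand it to D(), naming the with-respect-to input
# on the right-hand side of the tilde.
d_f <- D(f(t) ~ t)

d_f(1)   # evaluate the derivative at t = 1
```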

When differentiating a function with two (or more) inputs, the function definition will have the names of the inputs on the right-hand side of the tilde expression, as in Active R chunk lst-xy-arguments-diff where the two arguments to f() are named x and y. But notice that in the use of D(), the right-hand side of the tilde expression lists only the “with respect to” variable, in this case, x.
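As a sketch of the two-input case, assuming mosaicCalc is installed: the formula \(x^2 \sin(y)\) below is an arbitrary example chosen for illustration, not necessarily the one used in the original chunk.

```r
library(mosaicCalc)  # assumed to provide D() and makeFun()

# The definition lists both inputs, x and y, on the right of the tilde.
f <- makeFun(x^2 * sin(y) ~ x & y)

# The call to D() lists only the with-respect-to input, x.
dx_f <- D(f(x, y) ~ x)

dx_f(x = 2, y = 1)   # mathematically, 2 * x * sin(y) evaluated at (2, 1)
```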

Needless to say, D() knows the rules for the derivatives of the pattern-book functions introduced in sec-d-pattern-book. For instance,
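One such case, sketched here for the pattern-book logarithm (the choice of function is an assumption; mosaicCalc is assumed to be installed):

```r
library(mosaicCalc)  # assumed to provide D()

# Derivative of the pattern-book logarithm.
d_log <- D(log(x) ~ x)

d_log      # should display a formula equivalent to 1/x
d_log(2)   # evaluate the derivative at x = 2
```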

Try the other eight pattern-book functions. For seven of these, D() produces a symbolic derivative. For the eighth, D() resorts to a “finite-difference” derivative (introduced in sec-finite-difference-derivatives). Which is this pattern-book function where D() produces a finite-difference derivative?

20.3 Finite-difference derivatives

Whenever you have a formula amenable to the construction of a symbolic derivative, that is what you should use. Finite-difference derivatives are useful in those many situations where you don’t have such a formula. The calculation is simple but has a weakness that points out the advantages of the evanescent-\(h\) approach.

For a function \(f(x)\) and a “small,” non-zero number \(h\), the finite-difference approximates the derivative with this formula:

\[\partial_x f(x) \approx \frac{f(x+h) - f(x-h)}{2h}\ .\]

To demonstrate, let’s construct the finite-difference approximation to \(\partial_x \sin(x)\). Since we already know the symbolic derivative, \(\partial_x \sin(x) = \cos(x)\), there is no genuinely practical purpose for this demonstration. Still, it can serve to confirm the symbolic rule.

We will call the finite-difference approximation fd_sine() and use makeFun() to construct it:

Notice that fd_sine() has a parameter, h, whose default value is set to 0.01. Whether 0.01 is “small” or not depends on the context.
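The construction just described might be written as follows. This is a sketch that applies the centered-difference formula above to \(\sin(x)\) and assumes mosaicCalc is installed:

```r
library(mosaicCalc)  # assumed to provide makeFun()

# Centered finite-difference approximation to the derivative of sin(x).
# h is a parameter whose default value is 0.01.
fd_sine <- makeFun((sin(x + h) - sin(x - h)) / (2 * h) ~ x, h = 0.01)

fd_sine(0)              # uses the default h; the exact derivative is cos(0) = 1
fd_sine(0, h = 0.001)   # the default can be overridden in the call
```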

To demonstrate that fd_sine() is a good approximation to the symbolic derivative \(\partial_x \sin(x)\), which we already know to be \(\cos(x)\), Active R chunk lst-fdsine-vs-sin plots both functions on one graph. The symbolic derivative is plotted in magenta; the finite-difference derivative in black. The difference between them is slight, and is shown in blue.
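The same comparison can be made numerically rather than graphically. The sketch below draws no plot; it evaluates both functions on a grid (the interval from -10 to 10 is an arbitrary choice) and reports the largest gap between them, assuming mosaicCalc is installed:

```r
library(mosaicCalc)  # assumed to provide makeFun()

fd_sine <- makeFun((sin(x + h) - sin(x - h)) / (2 * h) ~ x, h = 0.01)

# Grid of inputs on which to compare the two functions.
x <- seq(-10, 10, by = 0.01)

# Finite-difference derivative minus the symbolic derivative, cos(x).
err <- fd_sine(x) - cos(x)

max(abs(err))   # largest discrepancy over the grid
```

For the centered difference, the discrepancy is about \(h^2/6\) times the size of \(\cos(x)\), so with \(h = 0.01\) it stays below roughly 0.00002.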

Typically when using finite-difference derivatives, you do not have the symbolic derivative to compare against, as was done in Active R chunk lst-fdsine-vs-sin. This would be the case, for example, if the function is a sound wave recorded in the form of an MP3 audio file. How would we know the finite-difference method is reliable in such a case?

Operationally, we can define “small \(h\)” to be a value that gives practically the same result even if it is made smaller by a factor of 2 or 10. Active R chunk lst-fdsine-and-h plots the difference between the finite-difference derivatives with \(h=0.001\) and \(h=0.01\).

Look carefully at the vertical axis scale in the output from Active R chunk lst-fdsine-and-h. The tick marks are at \(\pm 0.0001\). The difference between the two finite-difference functions is practically zero. And if for some purpose even greater accuracy is required, make \(h\) even smaller. You can try this in Active R chunk lst-fdsine-and-h, say, by setting the \(h\) values to 0.0001 and 0.001. The difference between the two finite-difference derivatives will be roughly 100 times smaller.
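A numerical version of this check, as a sketch assuming mosaicCalc is installed (the grid and the names gap_coarse and gap_fine are illustrative):

```r
library(mosaicCalc)  # assumed to provide makeFun()

fd_sine <- makeFun((sin(x + h) - sin(x - h)) / (2 * h) ~ x, h = 0.01)
x <- seq(-10, 10, by = 0.01)

# Largest gap between the two finite-difference derivatives.
gap_coarse <- max(abs(fd_sine(x, h = 0.001)  - fd_sine(x, h = 0.01)))
gap_fine   <- max(abs(fd_sine(x, h = 0.0001) - fd_sine(x, h = 0.001)))

gap_coarse
gap_fine
gap_coarse / gap_fine   # how much the gap shrank
```

The ratio should come out close to 100, consistent with the claim above.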

In practical use, one employs the finite-difference method in those cases where one does not already know the exact derivative function.

In such situations, a practical way to determine what is a small \(h\) is to pick one based on your understanding of the situation. For example, much of what we perceive of sound involves mixtures of sinusoids with periods longer than one two-thousandth of a second, so you might start with \(h\) of 0.002 seconds. Use this guess about \(h\) to construct a candidate finite-difference approximation. Then, construct another candidate using a smaller \(h\), say, 0.0002 seconds. If the two candidates are a close match to one another, then you have confirmed that your choice of \(h\) is adequate.
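The procedure can be sketched in base R. Everything named here is hypothetical: fd_derivative() is an illustrative helper, signal() is a 50 Hz tone standing in for a recording that has no usable formula, and relative_gap() measures how far apart two candidates are.

```r
# Hypothetical helper: centered finite-difference derivative of any f.
fd_derivative <- function(f, h) {
  function(t) (f(t + h) - f(t - h)) / (2 * h)
}

# Stand-in for a recorded signal (an assumption): a 50 Hz tone.
signal <- function(t) sin(2 * pi * 50 * t)

# Largest disagreement between the candidates built with h and h / 10,
# relative to the size of the derivative itself.
relative_gap <- function(h, t = seq(0, 0.1, by = 1e-4)) {
  coarse <- fd_derivative(signal, h)(t)
  fine   <- fd_derivative(signal, h / 10)(t)
  max(abs(coarse - fine)) / max(abs(fine))
}

relative_gap(0.002)    # candidates with h = 0.002 and h = 0.0002
relative_gap(0.0002)   # candidates with h = 0.0002 and h = 0.00002
```

For this stand-in signal the first gap is several percent and the second is well under one percent, which suggests that 0.0002 is adequate here while 0.002 is not. What counts as a close match depends on the purpose of the calculation.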

It is tempting to think that the approximation gets better and better as h is made even smaller. But that is not necessarily true for computer calculations. The reason is that quantities on the computer have only a limited precision: about 15 digits. To illustrate, let’s calculate a simple quantity, \((\sqrt{3})^2 - 3\). Mathematically, this quantity is exactly zero. However, on the computer, as in Active R chunk lst-not-quite-zero, it is not quite zero:
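The calculation needs only base R. The name residue is illustrative, and the output shown is for standard double-precision arithmetic:

```r
# Mathematically zero, but not exactly zero in floating-point arithmetic.
residue <- sqrt(3)^2 - 3
residue
#> [1] -4.440892e-16
```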

With finite-difference derivatives, you can see this loss of precision if h is made too small in the finite-difference approximation to \(\partial_x \sin(x)\). In Active R chunk lst-fdsine-and-tiny-h we’ve tried h = 0.000000000001. Once you see the loss of precision, back off to a bigger, more satisfactory value of \(h\).
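The effect can also be seen numerically. This sketch assumes mosaicCalc is installed; max_error() is a hypothetical helper, and \(h = 10^{-5}\) is included only as a moderate value for comparison:

```r
library(mosaicCalc)  # assumed to provide makeFun()

fd_sine <- makeFun((sin(x + h) - sin(x - h)) / (2 * h) ~ x, h = 0.01)
x <- seq(-10, 10, by = 0.01)

# Largest error against the known derivative, cos(x), for a given h.
max_error <- function(h) max(abs(fd_sine(x, h = h) - cos(x)))

max_error(1e-5)    # a moderate h
max_error(1e-12)   # a very tiny h: round-off dominates
```

The error for the tiny \(h\) should be orders of magnitude larger than for the moderate one, because the subtraction in the numerator leaves only a few significant digits.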

This process of using a small and an even smaller \(h\) is reminiscent of evanescent \(h\). But stop making \(h\) smaller before you see the kind of outcome in Active R chunk lst-fdsine-and-tiny-h.

20.4 Second and higher-order derivatives

Many applications call for differentiating a derivative or even differentiating the derivative of a derivative. In English, such phrases are hard to read. They are much simpler using mathematical notation.

- \(f(x)\), a function

- \(\partial_x f(x)\), the derivative of \(f(x)\)

- \(\partial_x \partial_x f(x)\), the second derivative of \(f(x)\), usually written even more concisely as \(\partial_{xx} f(x)\).

There are third-order derivatives, fourth-order, and on up, although they are not often used.

To compute a second-order derivative \(\partial_{xx} f(x)\), first differentiate \(f(x)\) to produce \(\partial_x f(x)\). Then, still using the techniques described earlier in this chapter, differentiate \(\partial_x f(x)\).

There is a shortcut for constructing high-order derivatives using D() in a single step. On the right-hand side of the tilde expression, list the with-respect-to name repeatedly. For instance, look at the functions produced in the following active R chunks:

- The second derivative \(\partial_{xx} \sin(x)\):

- The third derivative \(\partial_{xxx} \ln(x)\):
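A sketch of both calls, assuming mosaicCalc is installed and that repeated with-respect-to names are joined with `&` on the right-hand side of the tilde (the names dd_sin and ddd_log are illustrative):

```r
library(mosaicCalc)  # assumed to provide D()

# Second derivative of sin(x): list x twice.
dd_sin <- D(sin(x) ~ x & x)

# Third derivative of log(x): list x three times.
ddd_log <- D(log(x) ~ x & x & x)

dd_sin(1)    # mathematically, -sin(1)
ddd_log(2)   # mathematically, 2 / 2^3
```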

Physics students learn a formula for the position of an object in free fall dropped from a height \(x_0\) and at an initial velocity \(v_0\):

\[ x(t) \equiv -\frac{1}{2} g t^2 + v_0 t + x_0\ .\]

The acceleration of the object is the second derivative \(\partial_{tt} x(t)\). Use D() to find the object’s acceleration.

The second derivative of \(x(t)\) with respect to \(t\) is calculated in Active R chunk lst-dd-for-g. Run that code.
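Such a calculation might look like the following sketch, assuming mosaicCalc is installed. The names g, v0, and x0 are left as symbols, and accel is an illustrative name:

```r
library(mosaicCalc)  # assumed to provide D()

# Second derivative of the free-fall position with respect to t.
accel <- D(-0.5 * g * t^2 + v0 * t + x0 ~ t & t)

accel   # printing the result shows the formula that D() constructed
```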

The acceleration does not depend on \(t\); it is the constant \(-g\), with the minus sign indicating that the acceleration is directed downward. No wonder \(g\) is called “gravitational acceleration.”

¹ There are functions where the derivative cannot be meaningfully defined. Examples are the absolute-value function or the Heaviside function, which we introduced when discussing piecewise functions in sec-piecewise-intro. sec-cont-and-smooth considers the pathological cases and shows how to spot them at a glance.